The Tech Behind SyncFused Imaging

We are solving one of Earth Observation's most fundamental challenges.

The Problem of Parallax and Time-Gaps

Traditionally, creating a multi-layered analysis required data from two separate satellites: one with a SAR sensor and one with an optical sensor. This approach introduces unavoidable errors from differences in viewing angle (parallax) and capture time (temporal gaps). For mission-critical applications, this level of uncertainty is unacceptable.
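The two error sources can be sized with simple geometry. The Python sketch below is a rough illustration only. It assumes a flat Earth and the standard optical relief-displacement approximation, where the top of an object of height h, viewed at angle θ from the vertical, lands about h·tan θ away from its base. It also assumes a target moving at constant speed during the time gap. The function names and the example values (a 50 m structure, views at +10° and −20°, a ship at 10 m/s, a 30-minute gap) are hypothetical and are not SyncFusion™ parameters.

```python
import math

def relief_displacement_m(height_m: float, view_angle_deg: float) -> float:
    """Hypothetical helper: horizontal shift (m) of the top of an object of
    height_m seen at view_angle_deg from vertical (flat-Earth approximation).
    Signed angles let two views on opposite sides shift in opposite directions."""
    return height_m * math.tan(math.radians(view_angle_deg))

def parallax_misregistration_m(height_m: float, angle_a_deg: float, angle_b_deg: float) -> float:
    """Hypothetical helper: relative misregistration (m) between two views of
    the same object taken at different viewing angles."""
    return abs(relief_displacement_m(height_m, angle_a_deg)
               - relief_displacement_m(height_m, angle_b_deg))

def target_drift_m(speed_m_s: float, gap_s: float) -> float:
    """Hypothetical helper: distance (m) a target moving at constant speed
    covers between two captures separated by gap_s seconds."""
    return speed_m_s * gap_s

# Illustrative values only: a 50 m tower seen from two satellites on opposite sides.
print(f"{parallax_misregistration_m(50, 10, -20):.1f}")  # → 27.0
# A ship at 10 m/s during a 30-minute gap between captures.
print(f"{target_drift_m(10, 30 * 60):.0f}")  # → 18000
```

Even in this simplified model, a tall structure can be offset by tens of metres between the two images. A moving vessel can be kilometres away from where the second sensor recorded it, so a pixel-by-pixel overlay would pair unrelated features.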

The SyncFusion™ Technology Stack

SyncFusion™ is more than just a sensor; it's an end-to-end system of hardware and software designed to work in perfect harmony.

Hardware Integration

AI-Powered Fusion Algorithms

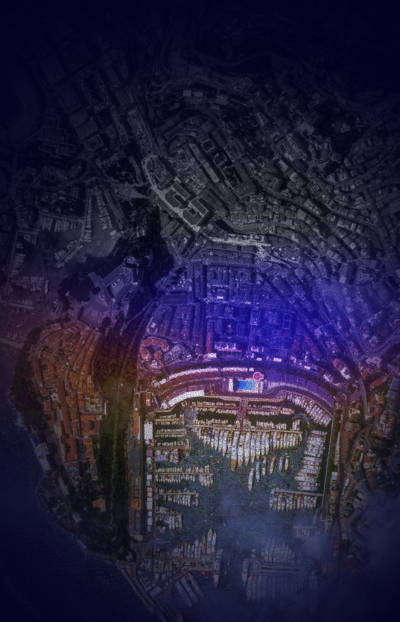

Anatomy of a GalaxEye SyncFused OptoSAR Image

The Optical Layer

Provides intuitive visual context, color, and texture, making the image easy to interpret. However, it is limited by darkness and obscured by clouds and smoke.

The SAR Layer

Sees through clouds and smoke and operates day or night, revealing structural information, surface texture, and elevation changes. However, its imagery is difficult to interpret intuitively.

The SyncFused™ Image

Combines the clarity of optical imaging with the all-weather reliability of SAR. The result is a single dataset with complete context and undeniable ground truth, captured in a single pass.